Team Jun 17, 2023 No Comments

Artificial Intelligence (AI) has emerged as a transformative field that encompasses the development of intelligent machines capable of performing tasks that typically require human intelligence. With the rapid advancements in technology and the increasing reliance on data-driven solutions, the demand for AI experts has skyrocketed in recent years. This guide aims to provide a comprehensive overview of the AI career landscape, the skills and qualifications required to excel in this field, educational pathways for aspiring AI professionals, and practical steps to embark on a successful AI journey.

AI can be broadly classified into two categories: Narrow AI and General AI. Narrow AI, also known as Weak AI, focuses on specific tasks and is designed to excel in a particular domain. Examples of narrow AI applications include virtual assistants like Siri and Alexa, recommendation systems, and image recognition algorithms. On the other hand, General AI, often referred to as Strong AI, aims to possess human-level intelligence and can perform a wide range of intellectual tasks. While General AI remains an aspiration, much of the current AI development revolves around Narrow AI.

Machine learning (ML) forms the backbone of many AI applications. It is a subset of AI that enables systems to learn and improve from experience without being explicitly programmed. ML algorithms are designed to analyze large volumes of data, detect patterns, and make predictions or decisions based on the identified patterns. Deep learning, a subfield of ML, uses neural networks with multiple layers to process complex data and extract high-level abstractions. Deep learning has revolutionized areas such as computer vision, natural language processing, and speech recognition.
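To make the idea of "learning from data instead of explicit rules" concrete, here is a minimal sketch of a nearest-neighbor classifier in plain Python. The toy data, the labels, and the function name `nearest_neighbor_predict` are illustrative assumptions, not taken from any particular library.

```python
import math


def nearest_neighbor_predict(train_points, train_labels, query):
    """Return the label of the training point closest to `query`."""
    best_label = None
    best_distance = math.inf
    for point, label in zip(train_points, train_labels):
        distance = math.dist(point, query)  # Euclidean distance
        if distance < best_distance:
            best_distance = distance
            best_label = label
    return best_label


# Hypothetical toy data: (hours of daylight, temperature in Celsius) -> season
train_points = [(8.0, 2.0), (9.0, 5.0), (15.0, 24.0), (16.0, 28.0)]
train_labels = ["winter", "winter", "summer", "summer"]

# No rule such as "if temperature > 15" is written anywhere;
# the prediction comes entirely from the stored examples.
print(nearest_neighbor_predict(train_points, train_labels, (14.0, 22.0)))
```

Real projects would normally reach for a library implementation, but the sketch shows the core pattern: the behavior is determined by examples rather than hand-written rules.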

The field of AI is constantly evolving, with new trends and breakthroughs shaping its future. Some of the emerging trends in AI research and development include:

Explainable AI: As AI systems become more complex, there is a growing need for transparency and interpretability. Explainable AI aims to provide insights into the decision-making process of AI algorithms, enabling humans to understand and trust the results.

Reinforcement Learning: This approach focuses on training AI agents to make decisions and take actions in dynamic environments. Reinforcement learning has shown promising results in autonomous driving, robotics, and game playing.

Generative Adversarial Networks (GANs): GANs consist of two neural networks, a generator and a discriminator, that compete against each other. GANs have gained attention for their ability to generate realistic and high-quality synthetic data, opening avenues for applications in image synthesis, video generation, and creative design.

To excel in the field of AI, professionals need a combination of technical skills, domain knowledge, and critical thinking abilities. Some core technical skills required for AI professionals include:

Programming Languages: Proficiency in programming languages such as Python, R, and Java is essential for implementing AI algorithms, manipulating data, and building AI models.

Statistics and Probability: A strong foundation in statistics and probability theory is crucial for understanding the mathematical principles underlying AI algorithms and evaluating their performance.

Linear Algebra and Calculus: Concepts of linear algebra and calculus are fundamental to grasping the inner workings of AI models, especially in areas like optimization and gradient descent.

Data Visualization and Manipulation: The ability to visualize and manipulate data using tools like Pandas and Matplotlib is important for data preprocessing, exploratory data analysis, and presenting insights effectively.
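As a small illustration of why calculus matters here, the following sketch fits a straight line with gradient descent using only the Python standard library. The data, learning rate, and step count are assumptions chosen for the example; the gradients are the partial derivatives of the mean squared error with respect to the slope `w` and the intercept `b`.

```python
def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y ~ w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Prediction error for every data point at the current w and b
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Partial derivatives of mean(error^2) with respect to w and b
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        # Step against the gradient to reduce the error
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Hypothetical data generated from y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = fit_line(xs, ys)
print(round(w, 3), round(b, 3))
```

Frameworks such as TensorFlow and PyTorch compute these gradients automatically, but the update rule they apply is the same idea.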

An understanding of machine learning algorithms, neural networks, and deep learning architectures is also a key component of an AI expert’s skill set. Additionally, possessing soft skills such as critical thinking, problem-solving, communication, and collaboration is invaluable in the AI field, enabling professionals to approach complex problems with a holistic perspective.

Educational pathways into AI offer a range of options for individuals interested in pursuing a career in this field. Some of the common pathways include:

Bachelor’s Degree Options for AI: Many universities offer undergraduate programs in AI, computer science, or related fields that provide a solid foundation in AI concepts and techniques. These programs typically cover topics such as algorithms, data structures, statistics, machine learning, and programming languages.

Master’s Degree Programs in AI and Related Fields: Pursuing a master’s degree in AI or a related field can provide in-depth knowledge and specialization. Programs like Master of Science in Artificial Intelligence delve into advanced topics such as natural language processing, computer vision, robotics, and reinforcement learning.

Ph.D. Programs in AI and Research Opportunities: For those aspiring to contribute to AI research and development, pursuing a Ph.D. in AI or a closely related field is a common path. Ph.D. programs offer opportunities to conduct cutting-edge research, collaborate with leading experts, and push the boundaries of AI knowledge.

Certifications for AI Skill Development: Ivy Professional School provides the best Artificial Intelligence Certification Courses. These programs provide flexibility and accessibility, allowing individuals to attend offline classes or learn at their own pace via live online classes. Learners can acquire specific AI skills or specialize in subfields like NLP, computer vision, or reinforcement learning.

Importance of Lifelong Learning and Staying Updated: AI is a rapidly evolving field, and staying updated with the latest developments is crucial for AI professionals. Engaging in continuous learning through attending workshops, webinars, and conferences, as well as reading research papers and joining online AI communities, ensures professionals remain at the forefront of AI advancements.

To build a strong foundation in AI, aspiring experts can focus on the following areas:

Gaining Programming Proficiency: Proficiency in programming is a cornerstone skill for AI professionals. Learning a language like Python, which is widely used in AI, provides the flexibility and tools necessary for AI development. Libraries and frameworks like TensorFlow and PyTorch are essential for implementing AI models efficiently.

Mastering Statistical Concepts and Algorithms: Statistics and probability theory are fundamental for understanding the mathematical principles underlying AI algorithms. Concepts such as hypothesis testing, regression, classification, and Bayesian inference are vital for analyzing data and making informed decisions.

Understanding Neural Networks and Deep Learning: Neural networks are the building blocks of many AI applications. Familiarity with different types of neural networks, including CNNs and RNNs, allows professionals to design and train models for tasks such as image recognition, natural language processing, and sequence generation.

Training and Fine-Tuning Models: AI experts must have the ability to train and fine-tune models using large datasets. Techniques such as regularization, optimization algorithms, and hyperparameter tuning play a critical role in improving model performance and generalization.
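The interplay of regularization and hyperparameter tuning can be sketched in a few lines of plain Python. The example below assumes a one-feature model `y ~ w * x` with an L2 (ridge) penalty and picks the penalty strength by a simple grid search on a held-out validation set. All data values and the candidate penalties are hypothetical.

```python
def ridge_weight(xs, ys, penalty):
    """Closed-form weight for y ~ w * x with an L2 penalty on w."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + penalty)


def mean_squared_error(xs, ys, w):
    """Average squared prediction error of the model y ~ w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical noisy training data and clean validation data (true slope is 2)
train_x = [1.0, 2.0, 3.0]
train_y = [2.4, 3.9, 6.6]
valid_x = [4.0, 5.0]
valid_y = [8.0, 10.0]

# Grid search: fit on the training set, score each penalty on the validation set
candidate_penalties = [0.0, 0.1, 1.0, 10.0]
scores = {
    penalty: mean_squared_error(valid_x, valid_y, ridge_weight(train_x, train_y, penalty))
    for penalty in candidate_penalties
}
best_penalty = min(scores, key=scores.get)
print(best_penalty, scores)
```

The penalty shrinks the weight toward zero. Too little shrinkage lets the model follow noise in the training data, while too much underfits, which is why the value is chosen on data the model did not train on.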

AI offers various exciting specializations that professionals can explore based on their interests and career goals. Some popular AI specializations include:

Natural Language Processing (NLP): NLP focuses on enabling computers to understand, interpret, and generate human language. Applications of NLP include machine translation, sentiment analysis, chatbots, and voice recognition systems.

Computer Vision: Computer vision aims to enable machines to analyze and interpret visual data, such as images and videos. It has applications in autonomous vehicles, object detection, facial recognition, and medical imaging.

Robotics and Automation: Robotics combines AI with mechanical engineering to design and develop intelligent machines capable of performing physical tasks. Robotic process automation (RPA) involves automating repetitive tasks using software bots.

Reinforcement Learning: Reinforcement learning involves training AI agents to make decisions based on trial-and-error interactions with an environment. It has applications in autonomous robotics, game playing, and optimization problems.

Predictive Analytics and Data Science: Predictive analytics utilizes AI techniques to analyze historical data and make predictions about future outcomes. It is widely used in fields such as finance, marketing, and healthcare for decision-making and forecasting.
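To give a feel for the trial-and-error learning described under reinforcement learning above, here is a minimal sketch of an epsilon-greedy agent on a multi-armed bandit, one of the simplest reinforcement learning settings. The reward means, noise level, and function name `run_bandit` are assumptions made up for this illustration.

```python
import random


def run_bandit(true_means, steps=2000, epsilon=0.1, seed=0):
    """Estimate the value of each arm with an epsilon-greedy strategy."""
    rng = random.Random(seed)
    arms = range(len(true_means))
    estimates = [0.0] * len(true_means)  # running average reward per arm
    pulls = [0] * len(true_means)        # how often each arm was tried
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(len(true_means))          # explore: random arm
        else:
            arm = max(arms, key=lambda a: estimates[a])   # exploit: best so far
        reward = rng.gauss(true_means[arm], 0.1)          # noisy feedback
        pulls[arm] += 1
        # Incremental update of the running average for the chosen arm
        estimates[arm] += (reward - estimates[arm]) / pulls[arm]
    return estimates, pulls


# Hypothetical environment: three actions with different average rewards
estimates, pulls = run_bandit([0.2, 0.8, 0.5])
best_arm = max(range(len(estimates)), key=lambda a: estimates[a])
print(best_arm, [round(e, 2) for e in estimates], pulls)
```

The agent is never told which action is best. It discovers this by occasionally trying random actions and otherwise repeating whatever has paid off so far.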

Gaining practical experience is essential for aspiring AI experts to apply their knowledge and hone their skills. Some avenues to gain practical experience include:

AI Internships and Industry Projects: Internships provide valuable hands-on experience in real-world AI applications. Joining a company or research institution as an AI intern allows individuals to work on projects, collaborate with professionals, and gain industry exposure.

Contributing to Open-Source AI Projects: Open-source AI projects provide an opportunity to contribute to the AI community and learn from experienced developers. By participating in open-source projects, individuals can enhance their coding skills, collaborate with a global community, and showcase their abilities.

Participating in Kaggle Competitions: Kaggle is a popular platform for data science and AI competitions. Competing in Kaggle challenges allows individuals to solve real-world problems, experiment with different AI techniques, and learn from the broader Kaggle community.

Building Personal AI Projects and Portfolios: Undertaking personal AI projects helps individuals showcase their skills and creativity. By developing their own AI applications, individuals demonstrate their ability to conceptualize, implement, and deliver AI solutions.

AI expertise opens up a wide range of job opportunities across various industries. Some common AI roles include:

AI Engineer: AI engineers design, develop, and deploy AI models and applications. They work on data preprocessing, feature engineering, model selection, and optimization to create AI solutions that solve specific problems.

Data Scientist: Data scientists leverage AI techniques to extract insights from data and develop predictive models. They analyze large datasets, create algorithms, and develop data-driven strategies to solve complex business problems.

Machine Learning Engineer: Machine learning engineers focus on implementing and optimizing machine learning algorithms. They work on training models, fine-tuning hyperparameters, and deploying AI solutions into production environments.

AI Research and Development Positions: Research-oriented positions involve pushing the boundaries of AI knowledge through exploration and experimentation. These roles are often found in academic institutions, research labs, and specialized AI companies.

Emerging AI career paths and job prospects continue to evolve with the rapid advancement of technology. Professionals can explore opportunities in areas like explainable AI, AI ethics, AI project management, and AI consulting. Salary expectations in the AI field vary based on factors such as experience, location, industry, and job level. AI salaries are generally competitive due to the high demand for skilled professionals and the transformative nature of the field.

Becoming an AI expert requires a combination of technical skills, domain knowledge, practical experience, and continuous learning. The AI field offers exciting opportunities for individuals passionate about cutting-edge technology, data analysis, and problem-solving. By following educational pathways, gaining practical experience, networking, and staying updated with the latest trends, aspiring AI experts can embark on a rewarding career in this rapidly evolving field. The demand for AI professionals will likely continue to grow as AI becomes increasingly integrated into industries and transforms the way we live and work.

Team Apr 26, 2023 No Comments

In the world of data science, things change and evolve very quickly. If you do not remain updated with the latest happenings and trends in data science, you will definitely fall behind. With new developments emerging constantly, it can be challenging to keep up with the latest advancements. There are several strategies that data scientists can use to stay informed about the data science trends in 2023.

Books have always been the traditional source of gaining knowledge, and they remain relevant even now. Books are a great way to learn a new subject, even if they may not always be the easiest or fastest to read. Some of the most experienced data scientists and researchers have written books on data science that will remain relevant and important forever. They are a great way to remain updated about the latest trends in data science.

Authors of textbooks are usually experienced in their fields and provide the information needed in a chronological fashion. Though information is easily available these days, it is important to consume information chronologically to make more sense of it.

Textbooks generally provide a more detailed and comprehensive exploration of a subject than blogs, articles, or whitepapers. Using textbooks to gain knowledge on various topics in data science becomes particularly important when you are trying to build a strong foundation for a specific subject.

Some top books you can go for include:

Online courses and certifications provide a convenient way to learn new skills and technologies in data science. Platforms such as Udacity, Coursera, Udemy, and edX offer numerous courses and certifications taught by industry experts and academics. They cover a wide range of topics, from the basics of data science and data visualization to the advanced machine learning and deep learning techniques that are building the future of data science.

If you want to upgrade your data science skills, Ivy Professional School’s data science courses are the best option. Through these courses, students receive top-notch training from experienced faculty from esteemed institutions such as IITs, IIMs, and US universities and have the opportunity to work on real-world analytics problems through capstone projects and internships.

The courses offer the flexibility of attending in-person or online live classes. Ivy provides lifetime placement assistance to its students, ensuring they have access to the right resources to achieve their career goals.

Some of the most popular data science courses from Ivy Professional School include:

Once you are working in the data science domain, following thought leaders and experts in the data science community on social media platforms such as LinkedIn, Twitter, and Medium is a great way to stay informed about the latest trends in data science and AI. Experts often share valuable insights, knowledge, updates and data science news. Social media gives the option of interacting with them by commenting on their posts as well.

Some of the leaders you can follow include Geoffrey Hinton, Yann LeCun, Andrew Ng, Fei-Fei Li, Ian Goodfellow, Andriy Burkov, Demis Hassabis, Cassie Kozyrkov, Andrej Karpathy, and Alex Smola, among others.

If you prefer audio-visual learning, YouTube is a great option to stay updated with the latest technologies and get data science news. For data science courses, statistical and mathematical concepts, and detailed tutorials on programming, YouTube has free lectures from leading institutes such as MIT, Stanford, Harvard, Oxford, and Princeton, which is a great way to gain knowledge in a subject in a cost-efficient and time-saving manner.

If you are a beginner or even an experienced professional who wants to learn new concepts, Ivy Professional School’s YouTube channel can greatly help you. It covers detailed videos on various topics in Python, SQL, Power BI, Tableau, Advanced Excel, and new technologies.

Some of our popular playlists include:

Check out Ivy’s videos here.

Attending industry events is a great way to stay informed about the latest data science trends. By attending sessions and workshops, you can learn about the latest tools, techniques, and frameworks being used in the industry and interact with other professionals in the field. You can also network with others, leading to new job opportunities.

Joining online communities such as Kaggle and Data Science Central can help you connect with other data scientists, participate in discussions, and learn from others’ experiences. In these forums, you can ask questions, share your knowledge, and collaborate on projects.

Experimenting with new tools, technologies, and frameworks can help you stay ahead of the curve, learn through practical experience, and remain updated with the latest trends in data science. By experimenting with the latest technologies in data science, you can also discover new approaches and projects to solve problems and gain insights that can be applied in your work.

Staying up-to-date with the latest happenings and data science trends in 2023 is essential for professionals to remain competitive and relevant. By remaining informed, you can make better decisions, provide more effective solutions, and make a great future in data science.

Team Apr 20, 2023 No Comments

Data science has emerged as one of the most promising career paths in recent years. And why not? A data science career allows you to work with cutting-edge technologies, earn lucrative remuneration, and directly impact a business through data-driven decisions.

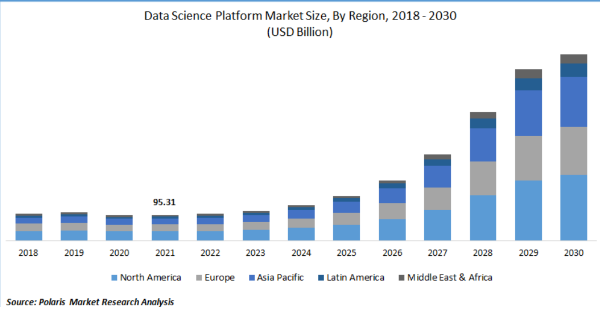

In fact, a report states that the global data science platform market was valued at USD 95.31 billion in 2021 and is expected to grow at a CAGR of 27.6% during the forecast period. As the demand for data-driven business decisions increases, companies will require more and more people capable of deriving meaningful insights from data. This will increase the availability of jobs in data science.

Source: Polaris Market Research
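To see what a 27.6% compound annual growth rate (CAGR) means in practice, the short sketch below compounds the 2021 figure forward year by year. The starting value and the rate come from the report quoted above. The five-year horizon is purely an assumption for illustration, since the forecast period is not stated here.

```python
def project_value(start_value, annual_growth_rate, years):
    """Compound a starting value forward at a constant annual growth rate."""
    return start_value * (1.0 + annual_growth_rate) ** years


MARKET_2021_USD_BN = 95.31  # from the report quoted above
CAGR = 0.276                # from the report quoted above
ASSUMED_YEARS = 5           # hypothetical horizon, not taken from the report

for year in range(ASSUMED_YEARS + 1):
    projected = project_value(MARKET_2021_USD_BN, CAGR, year)
    print(2021 + year, round(projected, 2))
```

Because growth compounds, each year's increase is applied to the previous year's larger total rather than to the original 2021 value.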

There is an exponential growth of data generated by businesses, organizations, and individuals, creating a huge demand for data scientists and analysts. Data scientists come with the capabilities to analyze and interpret complex data sets, figure out patterns and insights, and use this information to make informed business decisions.

They are crucial in the organization, and their output directly impacts business decisions. The demand for jobs in data science is expected to continue to grow in the coming years. A data science career path is quite sought after by people with a strong background in mathematics, statistics, and computer science.

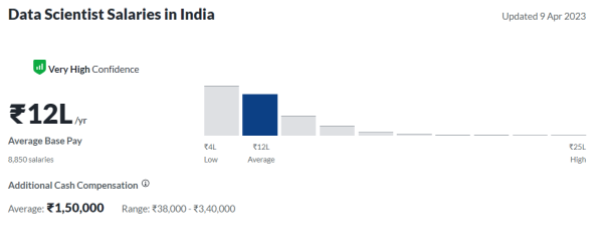

A data science career is considered one of the most lucrative career paths in the current job market. The demand for skilled data scientists is at an all-time high, and the salaries and benefits offered to data scientists clearly showcase the demand. Compensation along the data scientist career path depends on several factors, such as years of experience, location, and industry.

Image Source: Glassdoor

As data scientists can work in a variety of industries, it gives them a wide range of job opportunities and the ability to explore different industries and career paths. As the demand for data-driven decision-making continues to grow, we can expect to see the demand for skilled data scientists soar.

A data scientist’s work has a significant impact on businesses, organizations, and society. A data scientist works on complex data sets and identifies patterns and insights that help businesses make informed decisions. A data scientist working in healthcare analyzes patient data to figure out patterns that can eventually lead to more effective treatments and better outcomes.

A data scientist working in finance handles and analyzes customer data that can help prevent fraud or improve the customer experience. A data science career offers opportunities to work on meaningful projects that have a real impact on the world.

Data scientists work on cutting-edge technologies as they need to analyze and interpret complex data sets. Data scientists are often at the forefront of technological advancements, developing and implementing innovative solutions to solve complex business problems.

Data scientists use many tools and technologies, including programming languages like Python, R, and SQL and machine learning frameworks like TensorFlow and PyTorch. They also use various data visualization tools like Tableau and Power BI to present their findings in an easy-to-understand format. As the sector advances, data scientists will play a crucial role in developing and implementing innovative solutions to solve complex problems.

If all of this sounds exciting, a great way to start a data science career is to join a course that understands your needs.

Ivy Professional School is a great choice that provides students with the necessary skills and guidance to launch a successful career in analytics. Ivy starts from the basics and gradually moves to advanced concepts in analytics so that even beginners can easily grasp concepts.

Students are trained by faculty from elite institutions like IITs, IIMs, and US universities and exposed to real-world analytics problems through capstone projects, case studies, and internships. At Ivy Professional School, students receive support from teaching assistants to address their questions and concerns. Ivy offers sessions on building effective resumes and conducting mock interviews to prepare students for job opportunities. These resources help ensure that Ivy students are fully prepared and job-ready for future opportunities in data science.

Watch this video to get more tips on how to build a Career in Data Science

Team Apr 11, 2023 No Comments

Updated in May 2024

You have worked hard on your data science skills. You have analyzed datasets, built models, and maybe even told stories through data visualization. That’s great.

But when it’s time to find job opportunities, you have to show clients and hiring managers what you can really do. And they can’t read your mind and may not believe what you say.

That’s where you need a data science portfolio.

This portfolio is proof of your expertise. It showcases projects that convince potential employers that you are the ideal candidate for the job.

In this blog post, we will share some tips for building a data science portfolio that will make you stand out from the crowd and land your dream job.

A data science portfolio lists your projects and code samples to demonstrate your skills in action. Here, you provide your professional details to help potential employers decide whether you are the right candidate.

Building a portfolio is crucial for a data science career. Often this portfolio is what determines whether you get a job opportunity. It lets potential employers see your thought process, problem-solving skills, and what you can bring to the table.

You can build your portfolio either on your website or third-party platforms like Kaggle and GitHub. Your portfolio could include things like:

A portfolio shows your commitment to ongoing learning and development. Whether you are a beginner or a professional data scientist, a strong portfolio can make a world of difference in your career.

The data science job market is competitive. A thoughtful portfolio gives you a competitive advantage and helps you win job opportunities.

How does that happen? Well, the portfolio gives employers tangible evidence of your skills. You are not only saying you know data analysis but demonstrating it with completed projects. You know how the old proverb goes, actions speak louder than words.

A portfolio also lets you show your thought processes, creativity, and how you tackle real-world data problems. This adds a personal touch and helps you stand out from other candidates.

Even if you are a beginner who lacks work experience, a data scientist portfolio is all you need. It shows you are a skilled and passionate data scientist who is serious about their work. And this increases your chances of getting hired.

Here are some helpful tips that will help you build a thoughtful data science portfolio and get high-paying jobs in top MNCs.

Data science is a vast subject. It covers numerous sub-topics like machine learning, natural language processing, computer vision, data visualization, etc. While it’s great to be an all-rounder, trying to showcase everything in your portfolio can dilute the impact.

Instead, identify the areas of data science in which you hold expertise and that you are most passionate about. Here are some specific areas you can focus on:

By choosing a niche, you play to your strengths and interests. You can go deeper into specific projects and showcase your expertise. This is more impressive than trying to be a jack-of-all-trades.

This also helps you find the right opportunities. If a potential employer is looking for someone specialized in natural language processing, and your portfolio highlights several NLP projects, you are a clear match.

Now, this doesn’t mean you have to stick to the same niche and can’t explore other areas later. As you gain experience, you can try other niches and change your portfolio. But when you are a beginner data scientist, focusing on a specific area helps a lot.

The About section tells who you are as a data scientist and frames how someone sees your work. Here, you can provide a brief and professional introduction to you, your skills, and passion for data science.

You can include the following details in the About section:

You can show a little personality in this section to make your portfolio different and more memorable. It will grab employers’ attention and make them consider your portfolio.

Pro tip: If you know the exact company you are targeting, adjust your data science portfolio to match the company’s requirements.

Time to show what you can do as a data scientist. Now, this doesn’t mean that you have to show every project you have ever done.

Just the best 2-4 projects in your selected niche that highlight your skills will do the job. The projects should be unique, creative, and challenging to impress the employers.

For each featured project, follow this structure:

Your data science portfolio should also show the code you used in the projects. You can do it with Jupyter Notebooks or GitHub repositories. This will help to showcase your ability to write clean, organized, and well-documented code.

For example, Vaishnav Bose, a student at Ivy Pro School, has shown different projects he has undertaken on GitHub.

You need the right people to find you and see your portfolio. An active online presence can help you do that. It can increase visibility to potential employers and attract the right opportunities.

You just have to talk about your projects and showcase your skills on online platforms. Here are some platforms you can try:

For example, Aritra Adhikari, an Ivy student, has written this Medium post highlighting how he predicted customer lifetime value for an auto insurance company.

An online presence shows you are serious about your work. So, try to be consistent and keep sharing what you learn. You will see significant benefits within a year.

Pro tip: Link your data science portfolio to the bio of your profile on every platform. This will make it easy for people to discover your portfolio.

Building a data science portfolio is not a one-time thing. It’s a process. A never-ending process.

That means your portfolio should keep evolving as you advance in your career. You should keep adding new projects, update the old ones, and change the descriptions to reflect your current thought process.

As you gain experience, gather knowledge, master tools, and learn new skills, your portfolio should reflect that. It will show potential employers that you’re not stagnant but committed to your growth.

Also, get feedback from your peers and mentors to identify how your portfolio could be improved.

Your portfolio is a reflection of your expertise. It should show your journey of becoming a better data scientist.

A portfolio is a crucial element that can boost your career. That’s why Ivy Pro School helps students build an impressive portfolio in the Data Science and AI course.

This comprehensive course teaches you everything about data science, from data analytics, data visualization, and machine learning to Gen AI.

You get coached by IIT professors and industry experts working in Amazon, Google, Microsoft, etc. So, you can imagine how high the quality of teaching will be.

The course helps you complete 50+ real-world projects, including live industry capstone projects. This way, you not only gain hands-on experience but also build a solid data science portfolio that showcases your skills to potential employers.

Visit this page to learn more about Ivy’s Data Science and AI course.

Team Apr 08, 2023 No Comments

Analytics is an exciting career due to the high demand for professionals who can analyze data and provide insights, the chance to work with cutting-edge tools and technologies, and the satisfaction of making a significant impact on businesses and organizations. Starting a career in

Team Apr 04, 2023 No Comments

A data engineer’s role involves designing, developing, and maintaining the systems and infrastructure necessary for processing, storing, and analyzing massive datasets. They oversee the creation and management of data pipelines, maintain databases, ensure the quality of data, and integrate diverse data sources. Data engineers are the backbone of data-driven organizations, working to ensure that data is used efficiently and effectively in decision-making processes.
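As a rough illustration of what a data pipeline does, the sketch below walks through a tiny extract, transform, load (ETL) flow using only the Python standard library. The CSV contents, the `orders` table, and the function names are hypothetical. Production pipelines would typically rely on dedicated tooling, but the stages are the same: read raw data, apply quality checks, and store the clean result in a database.

```python
import csv
import io
import sqlite3

# Hypothetical raw export with a missing amount and a duplicated order
RAW_CSV = """order_id,customer,amount
1,alice,19.99
2,bob,
3,carol,5.50
3,carol,5.50
"""


def extract(text):
    """Parse raw CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Apply basic data quality rules: drop missing amounts and duplicates."""
    seen_ids = set()
    clean_rows = []
    for row in rows:
        if not row["amount"]:
            continue  # quality check: amount is required
        order_id = int(row["order_id"])
        if order_id in seen_ids:
            continue  # quality check: keep each order only once
        seen_ids.add(order_id)
        clean_rows.append((order_id, row["customer"], float(row["amount"])))
    return clean_rows


def load(rows, connection):
    """Store the cleaned rows in a relational table."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    connection.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    connection.commit()


connection = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), connection)
row_count, total_amount = connection.execute(
    "SELECT COUNT(*), SUM(amount) FROM orders"
).fetchone()
print(row_count, total_amount)
```

Keeping extract, transform, and load as separate functions makes each stage easy to test on its own and to swap out later, for example when the source changes from a file to an API.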

With the ever-increasing amount of data being generated and collected by businesses, we are witnessing a growing demand for skilled data engineers. To tap into this industry and make a rewarding career in data engineering, here are some top courses that can kickstart your career as a data engineer.

Ivy equips students with all the skills to launch a successful data engineering career. Ivy Professional School’s Cloud Data Engineering certification course provides hands-on experience with real-life projects and case studies in Big Data Analytics and Data Engineering. The students are assisted by teaching assistants to clear their questions and doubts. Students have the option to attend live face-to-face or online classes. Ivy also provides lifetime placement support to its students. Ideally, the course takes 6 months to complete.

The course covers important topics such as:

The course will allow students to join the fast-growing and rewarding data industry. A student can learn from anywhere, as the option of joining live classes online is also available. The course also focuses on holistic development, providing students with essential job-oriented skills such as CV building, LinkedIn profile building, networking skills, and interview skills, among others.

This course provides information on various aspects of data engineering, including the principles, methodologies, techniques, and technologies involved. Students will learn how to design and build databases, manage their security, and work with various databases such as MySQL, PostgreSQL, and IBM Db2. Students will be exposed to NoSQL and big data concepts, including practice with MongoDB, Cassandra, IBM Cloudant, Apache Hadoop, Apache Spark, SparkSQL, SparkML, and Spark Streaming.

In this course, students will get all the necessary skills to build their data engineering career. Students will acquire knowledge related to various concepts such as creating data models, constructing data warehouses and data lakes, streamlining data pipelines through automation, and handling extensive datasets.

They will learn how to design user-friendly relational and NoSQL data models that can handle large volumes of data. Students will also acquire the skills to construct efficient and scalable data warehouses that can store and process data effectively. They will be taught to work with massive datasets and interact with cloud-based data lakes. Students will learn how to automate and monitor data pipelines, which involves using tools such as Spark, Airflow, and AWS.

Coursera offers a variety of courses in data engineering. The courses discuss all the skills needed to excel in a data engineer role. They discuss the various stages and concepts in the data engineering lifecycle. The courses teach various engineering technologies such as Relational Databases, NoSQL Data Stores, and Big Data Engines.

Just like Coursera, Udemy offers a plethora of courses in data engineering. Some popular ones include Data Engineering using AWS Data Analytics, Data Engineering using Databricks on AWS and Azure, Data Warehouse Fundamentals for Beginners, Taming Big Data with Apache Spark and Python – Hands On, among others.

Online data engineering courses provide an excellent opportunity to acquire new skills and knowledge. Whether you are a beginner or an experienced professional, these courses can help you gain a deeper understanding of data engineering concepts, stay up-to-date with the latest trends and technologies, and accelerate your career.

Team Mar 27, 2023 No Comments

The never-ending rumors of OpenAI bringing out GPT-4 finally ended last week when the Microsoft-backed company released the much-awaited model. GPT-4 is being hailed as the company’s most advanced system yet and it promises to provide safer and more useful responses to its users. For now, GPT-4 is available on ChatGPT Plus and as an API for developers.

The newly launched GPT-4 can generate text and accept both image and text inputs. As per OpenAI, GPT-4 has been designed to perform at a level that can be compared to humans across several professional and academic benchmarks. The new ChatGPT-powered Bing runs on GPT-4. GPT-4 has been integrated with Duolingo, Khan Academy, Morgan Stanley, and Stripe, OpenAI added.

This announcement follows the success of ChatGPT, which launched just four months ago and became the fastest-growing consumer application in history. During the developer livestream, Greg Brockman, President and Co-Founder of OpenAI, said that OpenAI has been building GPT-4 since they opened the company.

OpenAI also mentioned that a lot of work still has to be done. The company is looking forward to improving the model “through the collective efforts of the community building on top of, exploring, and contributing to the model.”

So, what makes GPT-4 stand out from its predecessors? Let us find out:

One of the biggest upgrades for GPT-4 has been its multimodal abilities. This means that the model can process both text and image inputs seamlessly.

As per OpenAI, GPT-4 can interpret and comprehend images just like text prompts. This feature is not limited to any specific image type or size. The model can understand and process all kinds of images, from a hand-drawn sketch to a screenshot or a document containing text and images.

OpenAI assessed the performance of GPT-4 on traditional benchmarks created for machine learning models. The findings have shown that GPT-4 surpasses existing large language models and even outperforms most state-of-the-art models.

As many ML benchmarks are written in English, OpenAI sought to evaluate GPT-4’s performance in other languages too. OpenAI says it used Azure Translate to translate the MMLU benchmark.

Image: OpenAI

OpenAI mentions that in 24 out of 26 languages tested, GPT-4 surpassed the English-language performance of GPT-3.5 and other large language models like Chinchilla and PaLM, including for low-resource languages like Latvian, Welsh, and Swahili.

To differentiate between the capabilities of GPT-4 and GPT-3.5, OpenAI conducted multiple benchmark tests, including simulating exams originally meant for human test-takers. The company used publicly available tests like Olympiads and AP free response questions and also obtained the 2022-2023 editions of practice exams. OpenAI says it did no specific training for these tests.

Here are the results:

Image Source: OpenAI

OpenAI dedicated six months to enhancing GPT-4’s safety and alignment with the company’s policies. Here is what it came up with:

1. According to OpenAI, GPT-4 is 82% less likely to generate inappropriate or disallowed content in response to requests.

2. It is 29% more likely to respond to sensitive requests in a way that aligns with the company’s policies.

3. It is 40% more likely to provide factual responses compared to GPT-3.5.

OpenAI also mentioned that GPT-4 is not “infallible” and can “hallucinate,” so it is important not to rely on its output blindly.

OpenAI has been at the forefront of natural language processing advancements, starting with their GPT-1 language model in 2018. GPT-2 came in 2019. It was considered state-of-the-art at the time.

In 2020, OpenAI released its next model, GPT-3, which was trained on a larger text dataset and delivered improved performance. Finally, ChatGPT came out a few months back.

Generative Pre-trained Transformers (GPT) are language models that can produce human-like text. These models have a wide range of applications, including answering queries, creating summaries, translating text to various languages (even low-resource ones), generating code, and producing various types of content like blog posts, articles, and social media posts.

Team Mar 25, 2023 No Comments

Staying up-to-date with the latest happenings in data science is crucial due to the field’s rapid growth and constant innovation. Beyond conventional ways to stay updated and get information, podcasts can be a fun and convenient way to access expert insights and fresh perspectives. They can also provide crucial information to help you break into a data science career or advance it successfully.

Here is a list of some popular podcasts any data enthusiast cannot afford to miss this year.

Michael Helbling, Tim Wilson, and Moe Kiss are co-hosts who discuss various data-related topics. Lighthearted in nature, the podcast covers a wide range of topics, such as statistical analysis, data visualization, and data management.

Professor Margot Gerritsen from Stanford University and Cindy Orozco from Cerebras Systems host this podcast, which features interviews with leading women in data science. The podcast explores their work, advice, and lessons learned to show how data science is being applied in various fields.

Launched in 2014 by Kyle Polich, a data scientist, this podcast explores various topics within the field of data science. The podcast covers machine learning, statistics, and artificial intelligence, offering insights and discussions.

Not So Standard Deviations is a podcast hosted by Hillary Parker and Roger Peng. The podcast primarily talks about the latest advancements in data science and analytics. Staying informed about recent developments is essential to survive in this industry, and the podcast aims to provide insights that will help listeners to do that easily. By remaining up-to-date with the latest trends and innovations, listeners can be in a better position to be successful in this field.

Hosted by Xiao-Li Meng and Liberty Vittert, the podcast discusses news, policy, and business “through the lens of data science.” According to the podcast, each episode is a case study of how data is used to lead, mislead, and manipulate.

Data Stories hosted by Enrico Bertini and Moritz Stefaner is a popular podcast exploring data visualization, data analysis, and data science. The podcast features a range of guests who are experts in their respective fields and discuss a wide variety of data-related topics, including the latest trends in data visualization and data storytelling techniques.

Felipe Flores, a data science professional with around 20 years of experience, hosts this podcast. It features interviews with some of the top data practitioners globally.

Dr. Francesco Gadaleta hosts this podcast. It presents the latest and most relevant findings in machine learning and artificial intelligence through a mix of solo episodes and interviews with researchers and influential scientists in the field.

Making Data Simple is a podcast hosted by Al Martin, the IBM VP of Data and AI development. The podcast talks about the latest developments in AI, big data, and data science and their impact on companies worldwide.

Hosted by Emily Robinson and Jacqueline Nolis, the podcast provides all the knowledge needed to succeed as a data scientist. As per the website, the Build a Career in Data Science podcast teaches professionals diverse topics, from how to find their first job in data science to the lifecycle of a data science project and how to become a manager, among others.

Disclaimer: The list is in no particular order.

Team Mar 21, 2023 No Comments

A mere century ago, no one could have imagined we would be so reliant on technology. But here we are, constantly being introduced to some of the smartest, trendiest, and most mind-boggling automation procedures. The modern world would come to a screeching halt without up-to-the-minute software, frameworks, and tools. TinyML is a new addition to this category of up-to-date technologies.

There are very few authoritative resources that shed light on TinyML. TinyML: Machine Learning with TensorFlow Lite on Arduino and Ultra-Low-Power Microcontrollers, authored by Daniel Situnayake and Pete Warden, is a well-regarded and reliable source that answers the question: ‘what is TinyML?’. TinyML is an advancing field that combines Machine Learning and Embedded Systems to run models quickly on low-power microcontrollers with limited memory.

Another important point about TinyML: the machine learning framework most closely associated with it is TensorFlow Lite, although it is not the only option. Not sure what TensorFlow is? Check the detailed guide on TensorFlow.

Waiting for machine learning magic is rarely a pleasant experience. When regular Machine Learning handles commands like ‘Okay Google’, ‘Hey Siri’, or ‘Alexa’, the response can be slow, yet short commands like these call for a quick reaction. That fast response becomes possible when a TinyML application handles the command on the device.

It’s time to dive deep into the discussion of TinyML:

What is TinyML?

TinyML is a specialized area of study that sits at the intersection of embedded systems and machine learning (ML). It enables the development and deployment of complex ML models on low-power processors that have limited computational abilities and memory.

TinyML allows electronic devices to overcome their limitations by gathering information about their surroundings and acting on that data with ML algorithms. It also enables users to enjoy the benefits of AI in embedded tools. The simplest answer to ‘what is TinyML?’: TinyML is a way to bring knowledge and intelligence safely to electronic devices using minimal power.

The rapid growth of the software and hardware ecosystems enables TinyML applications on low-powered systems such as sensors. It makes real-time responses possible, which are in high demand these days.

TinyML is growing in popularity in the real world because it works without a strong internet connection and without a massive investment of money and time. It is rightly labeled a breakthrough in the ML and AI industry.

TinyML addresses several shortcomings of standard Machine Learning (ML) models. A typical ML system cannot perform at its best without massive processing power. TinyML, by contrast, is well placed to take over the edge device space. It does not demand manual intervention, such as connecting the device to a charging point, just to process simple commands or perform small tasks.

TinyML enables the prompt performance of small but integral functions without massive power usage. Pete Warden, a pioneering figure in the TinyML field, says that TinyML applications should not need more than 1 mW to function.
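To put the 1 mW figure in perspective, here is a rough battery-life calculation in Python. It is only a back-of-the-envelope sketch: the coin cell capacity and voltage are assumed illustrative values (roughly what a CR2032-style cell is rated for), the 100 mW comparison device is hypothetical, and self-discharge and conversion losses are ignored.

```python
# Rough battery-life estimate for a device with a fixed average power draw.
# All battery figures below are illustrative assumptions, not measurements.


def battery_life_hours(capacity_mah: float, voltage_v: float, avg_power_mw: float) -> float:
    """Estimated runtime in hours, ignoring self-discharge and conversion losses."""
    if avg_power_mw <= 0:
        raise ValueError("avg_power_mw must be positive")
    energy_mwh = capacity_mah * voltage_v  # mAh * V = mWh
    return energy_mwh / avg_power_mw


# Assumed coin cell: 225 mAh at 3.0 V.
ASSUMED_CAPACITY_MAH = 225.0
ASSUMED_VOLTAGE_V = 3.0

# A TinyML-style budget of 1 mW versus a hypothetical 100 mW device.
tinyml_hours = battery_life_hours(ASSUMED_CAPACITY_MAH, ASSUMED_VOLTAGE_V, avg_power_mw=1.0)
hungry_hours = battery_life_hours(ASSUMED_CAPACITY_MAH, ASSUMED_VOLTAGE_V, avg_power_mw=100.0)

print(f"1 mW device:   {tinyml_hours:.0f} h (about {tinyml_hours / 24:.0f} days)")
print(f"100 mW device: {hungry_hours:.2f} h")
```

Under these assumptions, the 1 mW device runs for weeks on a single coin cell, while the 100 mW device lasts less than a working day.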

If you are not well-versed in the basic concept of machine learning, our blog might help you understand it better.

New-age data processing tools and practices (Data Analytics, Data Engineering, Data Visualization, Data Modeling) have become mainstream due to their ability to offer instant solutions and feedback.

TinyML relies on the same data processing as other ML approaches; it is just faster to respond. Here are a few benefits of TinyML that we are all familiar with, probably without being aware of the technology behind them:

Usually, a user expects an instant answer or reaction from a device when a command is given. But the outcome depends on a lengthy process in which instructions travel from the device to a server and back. This process is time-consuming, so the response is sometimes delayed.

A TinyML application makes the entire function simple and fast. Users are only concerned with the response; what goes on inside does not interest many of them. Modern electronic gadgets with an integrated data processor are a boon of TinyML, which delivers the fast reaction that customers are fond of.

Managing, transmitting, and processing data across a network is an intensive process that also increases the risk of data theft or leaks. TinyML safeguards user information to a great extent. How? The framework allows data to be processed on the device. The growing popularity of Data Engineering has also increased the need for safe data processing. With the shift from entirely cloud-based processing to localized processing, data leaks become a much less common problem for users. TinyML reduces the need to secure the complete network, so a secured IoT device goes a long way.

Safe data transfer normally depends on a comprehensive server infrastructure. As TinyML reduces the need for data transmission, devices also consume less energy than models built before the field became popular. TinyML typically runs on microcontrollers, low-power hardware that uses minimal electricity to perform its duties. Devices can run for long stretches without a battery change, even when they are in continuous use.

Regular ML operations demand a strong internet connection, but not when TinyML is in action. The sensors capture and process information even without an internet connection, so there is no need to worry about data being delivered to a server without your knowledge.

TinyML is almost perfect, but it is not free from flaws. While the world is fascinated by the potential of TinyML and constantly seeking answers to ‘what is TinyML?’, it is important to keep everyone informed of the challenges the framework throws at users. Based on online sources and expert views, a few limitations of TinyML are listed here:

Regular ML models use an amount of power that industry experts can predict. TinyML does not share this advantage, as each model and device uses a different amount of electricity, so forecasting an accurate number is not possible. Another challenge users often face is an inability to determine how fast they can expect the outcome of commands on their device.

The small size of the hardware also limits the available memory and storage. Standard ML models, which run on larger machines, do not face this constraint.
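The memory limitation can be illustrated with a little arithmetic. The Python sketch below estimates how much storage a model's weights need at 32-bit floating point versus 8-bit integers. Quantizing weights to 8 bits is a common way to shrink models for microcontrollers, but it is not described in this article and is shown here only as an illustration. The parameter count and the 256 KB flash budget are hypothetical values, and the estimate covers weights only, not activations or runtime overhead.

```python
# Estimate whether a model's weights fit in a microcontroller's flash memory.
# The parameter count and flash budget are hypothetical, illustrative values.

BYTES_FLOAT32 = 4  # one 32-bit floating-point weight
BYTES_INT8 = 1     # one weight after 8-bit integer quantization


def weights_size_bytes(num_params: int, bytes_per_param: int) -> int:
    """Storage needed for the weights alone (no activations, no runtime overhead)."""
    return num_params * bytes_per_param


def fits_in_budget(size_bytes: int, budget_bytes: int) -> bool:
    """True if the weights fit within the given storage budget."""
    return size_bytes <= budget_bytes


ASSUMED_PARAMS = 100_000            # hypothetical small model
ASSUMED_FLASH_BUDGET = 256 * 1024   # hypothetical 256 KB of flash

float32_size = weights_size_bytes(ASSUMED_PARAMS, BYTES_FLOAT32)
int8_size = weights_size_bytes(ASSUMED_PARAMS, BYTES_INT8)

fits_float32 = fits_in_budget(float32_size, ASSUMED_FLASH_BUDGET)
fits_int8 = fits_in_budget(int8_size, ASSUMED_FLASH_BUDGET)

print(f"float32 weights: {float32_size} bytes, fits: {fits_float32}")
print(f"int8 weights:    {int8_size} bytes, fits: {fits_int8}")
```

With these assumed numbers, the 32-bit version of the model is too large for the budget while the 8-bit version fits, which is why model size is a central design concern in TinyML.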

Many retail chains still monitor stock manually. State-of-the-art technologies such as TinyML can deliver more precise and accurate results than manual checks. Tracking inventory becomes straightforward when TinyML is in action. The combination of footfall analytics and TinyML has transformed the retail business.

TinyML can be a game-changer for the farming industry. Whether it’s a survey of the health of farm animals or sustainable crop production, the possibilities are endless when the latest technologies are combined and adopted.

The smart framework expedites factory production by notifying workers about necessary preventative maintenance. It streamlines manufacturing projects by implementing real-time decisions. It makes this possible by thoroughly studying the condition of the equipment. Quick and effective business decisions become effortless for this sector.

TinyML applications simplify real-time information collection and the routing and rerouting of traffic. They also enable the fast movement of emergency vehicles. Combining TinyML with standard traffic control systems can improve pedestrian safety and reduce vehicular emissions.

Experts believe we have a long way to go before we can call TinyML a revolutionary innovation. However, it has already proved its ability and efficiency in the machine learning and data science industry. With a clear answer to the question ‘what is TinyML?’, we can expect the field to advance and the community to grow. The day is not far away when we will see TinyML applied in diverse ways that no one has yet envisaged. TinyML is ready to go mainstream as supporting programming tools expand.

If you are someone with immense interest in the AI, ML, and DL industry, our courses might uncover new horizons and job opportunities for you. Check the website of Ivy Professional School to enroll in our training programs!

Team Mar 15, 2023 No Comments

Finally, GPT-4 is here: it can now take image and text inputs and generate text responses.

The wait for the much anticipated GPT-4 is over.

Microsoft-backed OpenAI has revealed the launch of its highly anticipated GPT-4 model. It is being hailed as the company’s most advanced system yet, promising safer and more useful responses to its users. Fo